How to Choose the Right MIPI Camera for Your Project

- Vadzo Imaging

- Dec 30, 2025

Choosing the right MIPI camera for an embedded project isn’t as simple as picking the highest resolution. Engineers often face hurdles, from mismatched bandwidth and lane configurations to driver compatibility, shutter artifacts, and even cable length limits. These challenges can turn a promising prototype into a difficult integration task.

To make selection easier, we’ve outlined a clear, step-by-step flow that helps you choose the right MIPI camera based on real engineering priorities: Resolution → Frame Rate → Application → Number of Cameras → Host Platform → Distance. Follow this sequence to ensure your camera setup performs reliably from lab to production.

1. What is the required Resolution?

Start by quantifying your spatial requirement: what’s the smallest object or feature you must resolve at your working distance?

Convert that requirement to pixels per object and then to sensor dimensions (horizontal/vertical).

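Example: To resolve a 0.1 mm feature across a 100 mm field of view, you need about 1000 pixels across at one pixel per feature, and about 2000 pixels if you sample each feature with two pixels (the usual Nyquist margin). A 2000-pixel-wide 4:3 sensor is roughly 3 MP, so target 2–4 MP in practice.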
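The sizing arithmetic in the example above can be sketched as a small helper. The function names and the `pixels_per_feature` and `aspect` parameters are illustrative assumptions, not part of any Vadzo tool; a value of 2 for `pixels_per_feature` models a two-pixels-per-feature sampling margin.

```python
import math

def required_pixels(fov_mm: float, feature_mm: float,
                    pixels_per_feature: float = 1.0) -> int:
    """Pixels needed along one axis to resolve feature_mm across fov_mm."""
    if fov_mm <= 0 or feature_mm <= 0 or pixels_per_feature <= 0:
        raise ValueError("all inputs must be positive")
    # round() guards against floating-point noise before taking the ceiling
    return math.ceil(round(fov_mm / feature_mm * pixels_per_feature, 6))

def approx_megapixels(width_px: int, aspect=(4, 3)) -> float:
    """Rough sensor size in MP for a given width and an assumed aspect ratio."""
    height_px = math.ceil(width_px * aspect[1] / aspect[0])
    return width_px * height_px / 1e6

one_px = required_pixels(100, 0.1)        # one pixel per 0.1 mm feature
two_px = required_pixels(100, 0.1, 2.0)   # two pixels per feature
print(one_px, two_px, approx_megapixels(two_px))
```

Running the helper for both axes of the field of view gives a minimum sensor width and height to compare against candidate sensor datasheets.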

High-precision metrology and pathology often need 8–20 MP sensors, while UI, kiosk, or general video applications typically work well with 2–5 MP.

Trade-off: Higher resolution increases per-frame payload; it may force lower FPS or require additional MIPI lanes. Plan for ROI, binning, or tiling if needed to optimize performance.

Tip for engineers: Before locking in the sensor, estimate your required field of view and resolution using Vadzo’s FOV Calculator. It helps you map object size, working distance, and sensor dimensions to ensure your camera selection meets your real-world imaging needs.

Verification action: Compute pixels/frame = width × height and confirm downstream buffers and storage can handle sustained capture (see bandwidth check formula below).

2. What is the required Frame Rate?

Frame rate sets temporal resolution and control-loop latency.

Slow scenes (user interfaces): 15–30 fps.

Video/telemetry and many vision tasks: 30–60 fps.

Motion capture, robotics, or high-speed AOI: 60–120+ fps (use global shutter or short exposure modes).

Rule of thumb: latency budget = capture interval + ISP processing + pipeline + inference + actuation. If your control loop needs 50 ms end-to-end, keep the capture interval under 10–15 ms (roughly 67–100 fps or higher) to leave headroom for the other stages.
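The latency rule of thumb can be turned into a quick budget check. This is a minimal sketch: the function names are hypothetical, and the per-stage times in the example (8, 5, 17, and 8 ms) are made-up placeholders to be replaced with your own measurements.

```python
def max_capture_interval_ms(total_budget_ms: float, isp_ms: float,
                            pipeline_ms: float, inference_ms: float,
                            actuation_ms: float) -> float:
    """Time left for frame capture after the other stages are subtracted."""
    remaining = total_budget_ms - (isp_ms + pipeline_ms + inference_ms + actuation_ms)
    if remaining <= 0:
        raise ValueError("downstream stages already exceed the latency budget")
    return remaining

def min_fps(capture_interval_ms: float) -> float:
    """Lowest frame rate whose frame period fits the capture interval."""
    return 1000.0 / capture_interval_ms

# Hypothetical stage timings for a 50 ms control loop
interval = max_capture_interval_ms(50, isp_ms=8, pipeline_ms=5,
                                   inference_ms=17, actuation_ms=8)
print(f"capture interval <= {interval} ms, frame rate >= {min_fps(interval):.1f} fps")
```

If the function raises, the downstream stages need to be shortened before any frame rate can meet the budget.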

Verification action: Prototype with real motion and measure capture-to-action latency.

3. What is the Application? (Shutter, Spectral, Optics)

Application drives shutter type, spectral response, optics, and illumination design.

Moving scenes (conveyor/robotics): use global shutter sensors to avoid rolling skew/distortion. Global shutter is standard for high-speed machine vision.

Static/slow scenes (inspection, microscopy): rolling shutter can reduce cost and power.

Low light / NIR / fluorescence: choose sensors with high quantum efficiency (QE) and ensure optics and filters match the spectral band. Many silicon sensors respond over roughly 350–1050 nm, so remove the IR cut filter for NIR tasks.

Color requirement: if you do color-based classification, use color sensors; otherwise, monochrome often gives better SNR and edge fidelity.

Verification action: Define whether you need global shutter and confirm sensor datasheet (global vs rolling) and QE curves.

4. How many cameras need to be connected?

Multi-camera systems impose aggregate bandwidth, synchronization, and physical constraints.

Small systems (1–2 cams): the easiest case; allocate dedicated CSI lanes where available.

Stereo / multi-view (3–8+ cams): either use multiple CSI ports on SoC or use SerDes (FPD-Link/GMSL) to aggregate multiple sensors to a deserializer near the host. NVIDIA Jetson platforms often support multiple CSI ports or can be expanded via deserializer boards.

Synchronization: for frame-accurate capture, use hardware triggers (GPIO trigger) or broadcast sync (IEEE-1588 for networked cameras). Software sync will not give frame-perfect alignment.

Verification action: List simultaneous worst-case streams (pixels×bits×fps each) and sum them to confirm host/PCIe/USB or network can handle the aggregate.
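The worst-case sum described in this verification step can be sketched as follows. The `Stream` class, the `fits` helper, and the 80% utilization ceiling are illustrative assumptions; the host capacity figures in the example are placeholders, not specifications of any particular board.

```python
from dataclasses import dataclass

@dataclass
class Stream:
    width: int
    height: int
    bits_per_pixel: int
    fps: float

    def payload_bps(self) -> float:
        """Raw pixel payload in bits per second (no protocol overhead)."""
        return self.width * self.height * self.bits_per_pixel * self.fps

def aggregate_gbps(streams) -> float:
    """Sum of all simultaneous stream payloads in Gbps."""
    return sum(s.payload_bps() for s in streams) / 1e9

def fits(streams, host_capacity_gbps: float, utilization: float = 0.8) -> bool:
    """True if the aggregate stays under the assumed usable share of capacity."""
    return aggregate_gbps(streams) <= host_capacity_gbps * utilization

# Four 1080p RAW10 cameras at 30 fps
cams = [Stream(1920, 1080, 10, 30)] * 4
print(f"{aggregate_gbps(cams):.3f} Gbps, fits 4 Gbps host: {fits(cams, 4.0)}")
```

List each camera at its highest-resolution, highest-frame-rate mode so the sum reflects the true worst case.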

5. What is the Host Platform?

This is the place to map lanes, PHY, and ISP capability to your sensor choice.

Lane counts: many embedded SoCs support 2 or 4 CSI lanes. Example: Jetson Xavier NX/AGX exposes multiple CSI ports and can be configured for 4-lane operation; Raspberry Pi full boards typically expose 2 lanes (Compute Modules expose more). Always verify the exact board revision and connector pinout.

For detailed pin mapping and integration clarity, Vadzo provides platform-specific guides. These resources help engineers quickly verify pin compatibility and reduce the risk of lane or connector mismatches during prototyping.

PHY choice (D-PHY vs C-PHY):

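D-PHY (common for CSI-2): per-lane rates are quoted in bits per second. Earlier revisions top out at about 1.5 Gbps (v1.1) and 2.5 Gbps (v1.2) per lane, while D-PHY v2.0 raises the limit to about 4.5 Gbps per lane. Many embedded hosts still run at 1.5–2.5 Gbps per lane, so size the link against the rate your SoC actually supports.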

C-PHY encodes symbols differently and can provide higher aggregate bandwidth with fewer physical lanes (e.g., up to ~5.7 Gbps per trio in older specs and higher in newer revisions). Choose the PHY based on SoC support.
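To see where the ~5.7 Gbps per trio figure comes from, the sketch below applies the C-PHY mapping of 16 bits onto 7 symbols (about 2.28 bits per symbol) to a 2.5 Gsps symbol rate. The function names are illustrative, and the lane and trio counts in the comparison are arbitrary examples rather than a recommendation.

```python
# C-PHY maps 16 bits onto 7 symbols, i.e. about 2.28 bits per symbol
CPHY_BITS_PER_SYMBOL = 16 / 7

def cphy_link_gbps(symbol_rate_gsps: float, trios: int = 1) -> float:
    """Raw C-PHY bit rate for a given symbol rate and number of trios."""
    return symbol_rate_gsps * CPHY_BITS_PER_SYMBOL * trios

def dphy_link_gbps(lane_rate_gbps: float, lanes: int = 1) -> float:
    """Raw D-PHY bit rate: per-lane bit rate times the number of data lanes."""
    return lane_rate_gbps * lanes

print(f"C-PHY, 1 trio  @ 2.5 Gsps: {cphy_link_gbps(2.5):.2f} Gbps")
print(f"C-PHY, 3 trios @ 2.5 Gsps: {cphy_link_gbps(2.5, 3):.2f} Gbps")
print(f"D-PHY, 4 lanes @ 2.5 Gbps: {dphy_link_gbps(2.5, 4):.2f} Gbps")
```

These are raw link rates; protocol overhead still has to be subtracted before comparing them with a sensor's payload.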

ISP capability: host ISP handles debayer, noise reduction, HDR merge. Offloading these steps to the ISP reduces CPU/GPU load. If your ISP is limited, perform more preprocessing on the camera or choose a simpler sensor mode.

Driver & software: For Linux/Jetson, V4L2 and device-tree overlays are central. Confirm that the vendor provides DTS snippets and a tested driver to avoid late surprises. Community threads frequently cite device-tree misconfigurations as a common blocker.

Verification action: Map sensor modes to host supported modes (e.g., RAW10/12, RGB888) and test using v4l2-ctl / GStreamer early.
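An early `v4l2-ctl` smoke test can be scripted along these lines. This is a sketch under assumptions: the device node `/dev/video0`, the `RG10` pixel format (10-bit Bayer, RGGB order), and the helper names are placeholders; use whatever your sensor driver actually reports in the format listing.

```python
import shutil
import subprocess

def list_formats_cmd(device: str = "/dev/video0") -> list:
    """Command that lists the formats and frame sizes the driver exposes."""
    return ["v4l2-ctl", f"--device={device}", "--list-formats-ext"]

def capture_cmd(device: str, width: int, height: int,
                pixelformat: str, frames: int = 100) -> list:
    """Command that sets a mode, streams `frames` frames, and discards them."""
    return [
        "v4l2-ctl", f"--device={device}",
        f"--set-fmt-video=width={width},height={height},pixelformat={pixelformat}",
        "--stream-mmap", f"--stream-count={frames}", "--stream-to=/dev/null",
    ]

def run(cmd: list) -> str:
    """Run a command and return its stdout; fails loudly if the tool is missing."""
    if shutil.which(cmd[0]) is None:
        raise FileNotFoundError(f"{cmd[0]} not found (install v4l-utils)")
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout

# On the target board:
#   print(run(list_formats_cmd()))
#   run(capture_cmd("/dev/video0", 1920, 1080, "RG10"))
```

Comparing the listed modes against the sensor datasheet catches mode-table mismatches before any GStreamer pipeline is involved.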

6. What is the Distance / Cabling (practical constraint)?

MIPI CSI-2 was designed for short PCB/cable runs. If your camera is not closely co-located with the SoC, choose a different topology.

Native MIPI cable/FFC: reliable over short distances; typical practical limits range from a few centimeters up to ~15–30 cm for high clock rates. For low clock rates, you may get >1 m with careful routing, but this is not guaranteed. Use retimers/re-drivers or specialized FFCs for longer runs.

Long-run options: use SerDes solutions (FPD-Link, GMSL) or use a remote deserializer close to the host. These protocols are designed for meters of cable and provide robust EMI performance.

Practical guidance: if camera-to-host distance >0.3–0.5 m, evaluate SerDes or move the host closer to the camera.

Verification action: Model the physical cable (length, shield, connector) early and, if necessary, order a cable sample to validate signal integrity at intended PHY rates.

Bandwidth Check: A Simple Calculation

Use this to estimate lanes required:

payload_bits_per_frame = width × height × bits_per_pixel

required_bandwidth_bps = payload_bits_per_frame × fps / overhead_factor (use overhead_factor ≈ 0.8, i.e. assume about 80% of the raw link rate carries pixel data and the remaining 20% goes to protocol, encapsulation, and idle overhead)

Example: 1920 × 1080 @ 30 fps, 10-bit raw:

payload = 1920×1080×10 = 20,736,000 bits/frame

bandwidth ≈ 20,736,000 × 30 / 0.8 ≈ 777,600,000 bps ≈ 0.78 Gbps

At an effective D-PHY rate of 1.5 Gbps per lane, a single lane already covers this stream, and a 2-lane link leaves ample headroom (but validate the host/PHY operating point). Tools like MIPI bandwidth calculators can help.
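The bandwidth formula above translates directly into code. The function names below are illustrative; the 4K example and its 2.5 Gbps per-lane rate are assumed values chosen only to show a case that needs more than one lane.

```python
import math

def required_bandwidth_bps(width: int, height: int, bits_per_pixel: int,
                           fps: float, overhead_factor: float = 0.8) -> float:
    """Link rate needed for a stream, padded for protocol overhead."""
    payload_bits_per_frame = width * height * bits_per_pixel
    return payload_bits_per_frame * fps / overhead_factor

def lanes_required(bandwidth_bps: float, lane_rate_bps: float) -> int:
    """Smallest whole number of lanes that carries the bandwidth."""
    return math.ceil(bandwidth_bps / lane_rate_bps)

# Worked example from the text: 1080p30, 10-bit raw, 1.5 Gbps per lane
bw_1080p = required_bandwidth_bps(1920, 1080, 10, 30)
print(f"{bw_1080p / 1e9:.2f} Gbps, lanes: {lanes_required(bw_1080p, 1.5e9)}")

# Assumed heavier case: 4K60, 12-bit raw, 2.5 Gbps per lane
bw_4k = required_bandwidth_bps(3840, 2160, 12, 60)
print(f"{bw_4k / 1e9:.2f} Gbps, lanes: {lanes_required(bw_4k, 2.5e9)}")
```

CSI-2 links are usually configured as 1, 2, or 4 lanes, so a computed result of 3 lanes generally means planning for a 4-lane connection.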

Practical Integration Checklist (Must-do items before PCB spin)

Confirm connector pinout and lane ordering. Some boards expect fixed lane mapping.

Obtain vendor device-tree overlay and sample driver; test on an eval board first.

Validate sustained throughput with worst-case frames; monitor packet loss.

Run thermal tests at target frame rates and duty cycles; log sensor and SoC temps.

Verify synchronization strategy (hardware trigger or sync bus) for multi-camera setups.

Prototype with real lighting and object motion to validate shutter choice and exposure strategy.

Plan for field updates (firmware, device-tree) and test OTA update path if required.
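For the thermal item in the checklist, SoC temperatures on Linux can be logged from the standard sysfs thermal zones, as in the sketch below. The function name and dictionary key format are illustrative. Image sensor temperature is not covered here because it is typically read through vendor-specific registers or controls.

```python
from pathlib import Path

def read_thermal_zones(root: str = "/sys/class/thermal") -> dict:
    """Return {"<zone>:<type>": temperature in °C} for each readable zone."""
    temps = {}
    for zone in sorted(Path(root).glob("thermal_zone*")):
        try:
            zone_type = (zone / "type").read_text().strip()
            # sysfs reports temperature in millidegrees Celsius
            milli_c = int((zone / "temp").read_text().strip())
        except (OSError, ValueError):
            continue  # skip zones that are unreadable or report no value
        temps[f"{zone.name}:{zone_type}"] = milli_c / 1000.0
    return temps

# Print one snapshot; call this periodically during a long streaming run
for name, temp_c in read_thermal_zones().items():
    print(f"{name}: {temp_c:.1f} °C")
```

Logging a snapshot every few seconds alongside the frame counter makes it easy to correlate dropped frames with thermal throttling.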

Early, automated regression tests accelerate certification and reduce surprises during the manufacturing ramp. The Vadzo team provides end-to-end assistance throughout the development cycle, from camera module selection and firmware customization to deserializer configuration and system-level validation. Reach us for more details or support:

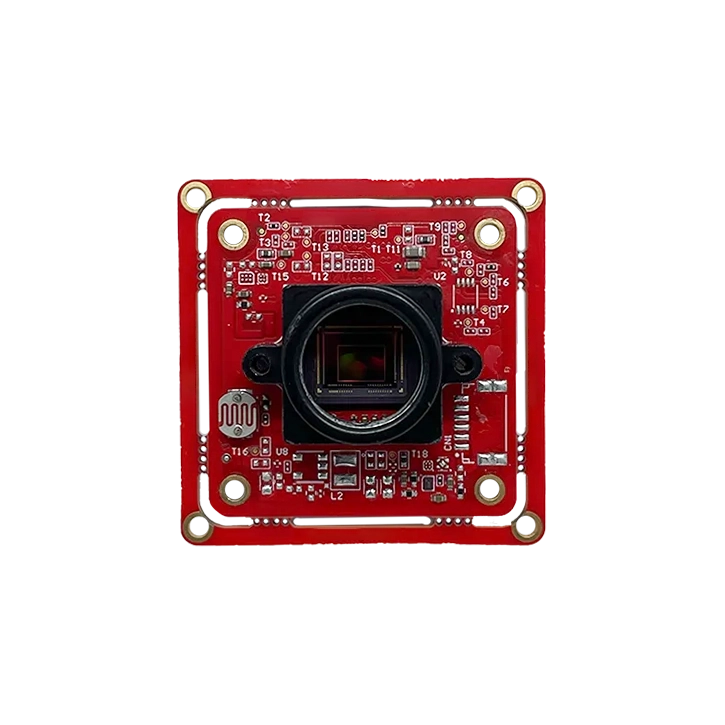

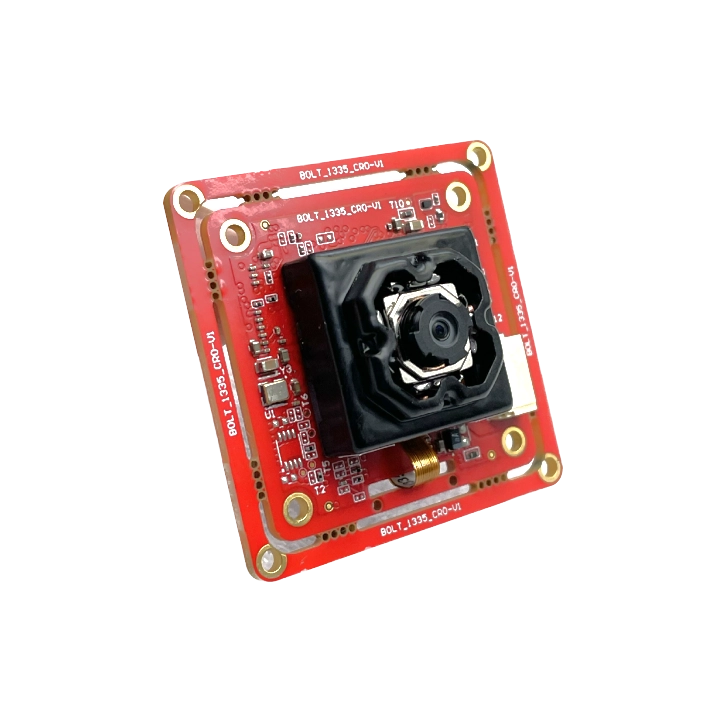

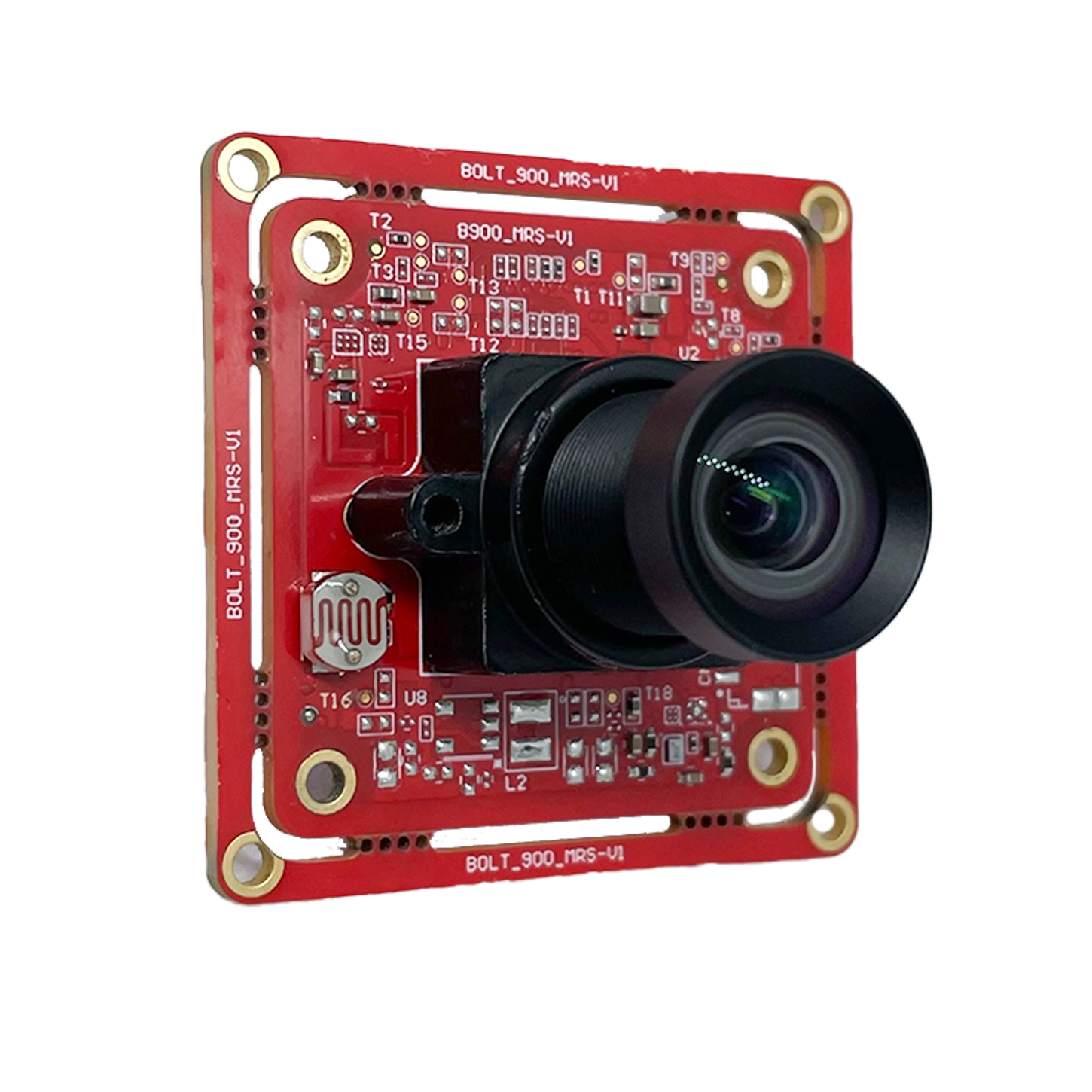

Vadzo Imaging & Support for MIPI Camera Integration

Vadzo Imaging provides a range of MIPI camera modules optimized for embedded platforms and offers engineering support for driver integration, device-tree configuration, and production hardening. Work with Vadzo early to align sensor selection, mechanical fixtures, and BSP support. This reduces integration time and ensures long-term production stability.

Technical FAQs for MIPI Cameras

1. How do I calculate the MIPI lanes required for my camera?

Compute payload_bits_per_frame = width × height × bits_per_pixel, then required_bandwidth_bps = payload_bits_per_frame × fps. Divide by per-lane effective data rate (check D-PHY/C-PHY per-lane rates) and include margin (use 70–80% utilization). Tools and calculators (e.g., MIPI bandwidth calculators) help automate this.

2. What is the practical maximum cable length for MIPI CSI-2?

Native MIPI CSI-2 over FFC is intended for short runs: typically a few centimeters up to about 15–30 cm at high data rates, and possibly around 1 m at lower data rates with careful design. Beyond roughly 0.3–0.5 m, use SerDes (FPD-Link/GMSL) or add retimers/re-drivers. Don't rely on generic long cables without SI validation.

3. Should I use D-PHY or C-PHY?

Use D-PHY when your SoC supports it (ubiquitous). Use C-PHY when you need higher aggregate bandwidth with fewer physical lines and the host supports it. Check SoC datasheet and vendor support; C-PHY is more common in newer high-bandwidth designs.

4. Does Raspberry Pi support 4-lane MIPI cameras?

The standard Raspberry Pi single-board models generally expose 2 CSI lanes. The Compute Module exposes more CSI lanes and can support 4-lane cameras; Jetson developer kits commonly expose 4-lane support (check specific board docs). Validate per board revision.

5. What are common device-tree pitfalls when integrating a MIPI camera on Jetson/Linux?

Common issues include incorrect lane mapping, wrong clock/reset lines, sensor mode index mismatch, and missing ISP nodes. Always use the vendor's dtsi snippets and test with dmesg/kern.log to trace driver initialization. NVIDIA documentation and community threads are invaluable for Jetson specifics.

6. How can I reduce bandwidth without lowering resolution?

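Use ROI (transmit only the region of interest) or reduce bits per pixel (for example, from 12 to 10); both preserve the spatial sampling of the area you transmit. Pixel binning and subsampling also cut bandwidth, but they lower the output resolution, so use them only when the reduced detail is acceptable. Hardware compression is an option, but it increases latency and processing complexity; use it only if necessary.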
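The effect of each option on payload can be estimated before touching the sensor configuration. This is a minimal sketch with illustrative function names; the 1080p baseline and the 1280 × 720 ROI are assumed example values.

```python
def stream_bps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Raw pixel payload in bits per second."""
    return width * height * bits_per_pixel * fps

def saving_percent(before_bps: float, after_bps: float) -> float:
    """Bandwidth reduction relative to the original stream, in percent."""
    return 100.0 * (1.0 - after_bps / before_bps)

full = stream_bps(1920, 1080, 12, 30)      # baseline: full frame, 12-bit
ten_bit = stream_bps(1920, 1080, 10, 30)   # same frame, 10-bit
roi = stream_bps(1280, 720, 12, 30)        # assumed 1280x720 ROI, 12-bit

print(f"12 -> 10 bit: {saving_percent(full, ten_bit):.1f}% less payload")
print(f"1280x720 ROI: {saving_percent(full, roi):.1f}% less payload")
```

Dropping from 12 to 10 bits always saves one sixth of the payload, while the ROI saving scales with the fraction of the frame that is discarded.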

7. When must I select global shutter over rolling shutter?

If the scene includes fast motion where geometric fidelity matters (robot arms, spinning shafts, high-speed conveyors), pick global shutter to avoid rolling skew and measurement errors. Otherwise rolling shutter may save cost and power.

8. What is the best way to synchronize multiple MIPI cameras?

Use hardware triggers (GPIO trigger lines) for frame start; for distributed networked cameras, use PTP/IEEE-1588 and a shared timebase. Software timestamps alone are insufficient for frame-accurate synchronization.

9. How do MIPI PHY data rates translate to effective lane bandwidth?

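D-PHY lane rates are quoted directly in bits per second (e.g., 1.5–2.5 Gbps per lane), so usable bandwidth is the line rate less protocol and blanking overhead. C-PHY rates are quoted in symbols per second, and each symbol carries about 2.28 bits, so multiply the symbol rate by that factor to get bits per second per trio. Use vendor PHY documentation (D-PHY or C-PHY whitepapers) to confirm usable bits/sec per lane, and always leave margin for overhead and skew.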

10. What test tools should I use during integration?

Use v4l2-ctl and GStreamer pipelines on Linux for stream validation; hardware logic analyzers and SerDes evaluation boards for PHY/SI debugging; dmesg/kern.log for driver issues; and thermal logging for long-run tests. Vendor SDKs and sample apps speed initial validation.